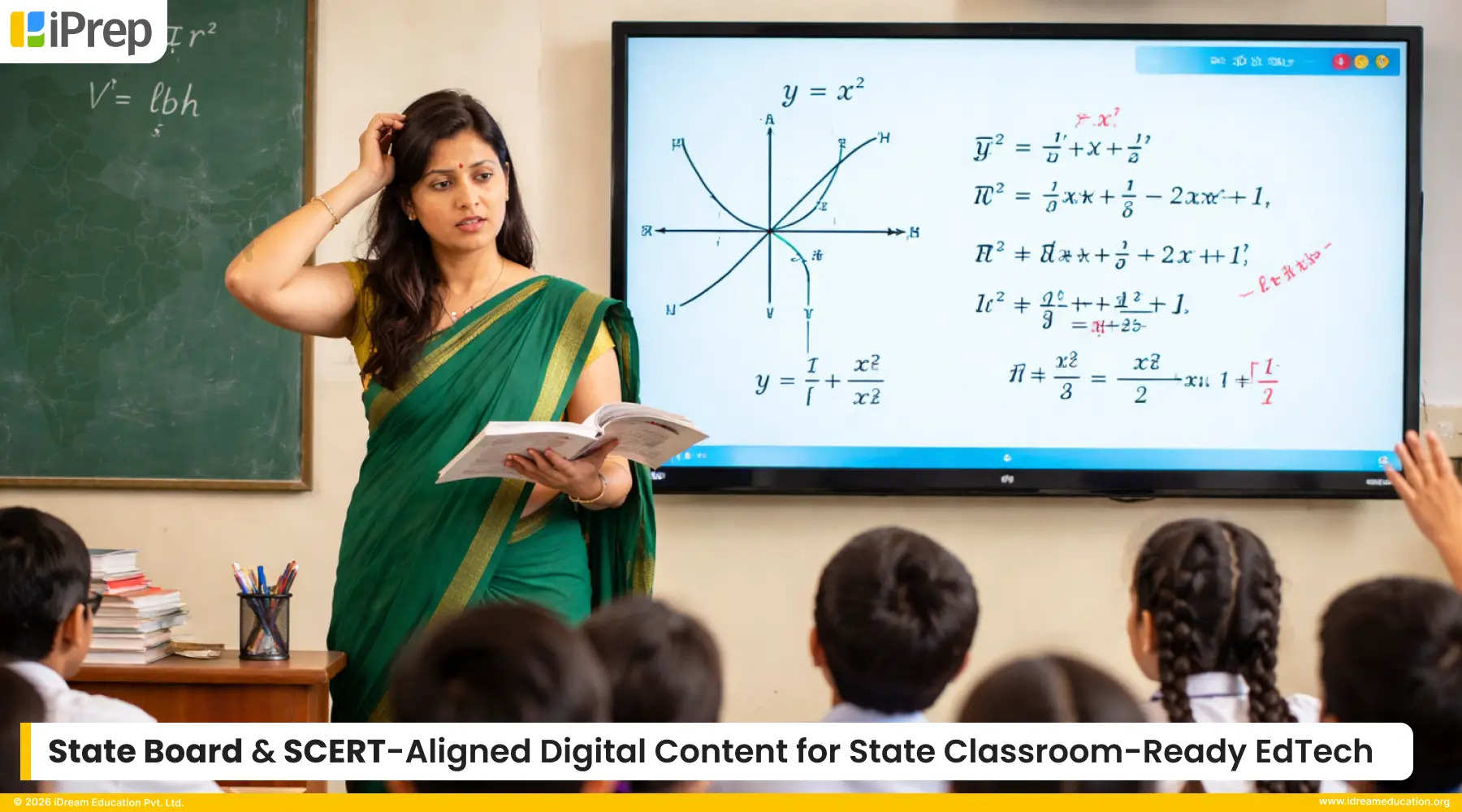

Across India, government schools are witnessing a rapid push towards digitisation. Smart classrooms, ICT labs, digital libraries, tablets, and large interactive displays are becoming increasingly common. Significant public funds are being invested to modernise infrastructure and bridge the digital divide. On paper, the intent is clear: transform teaching-learning through technology.

However, in most implementations, digitisation has stopped at hardware.

The most critical elements that actually define Digital Education – structured learning solutions, high-quality digital content, Learning Management Systems (LMS), Personalized Adaptive Learning (PAL), teaching–learning analytics, and outcome-focused reporting dashboards – are conspicuously absent. The emphasis has largely remained on procurement and installation, rather than on what learners and teachers are expected to do with the technology.

As a result, classrooms reflect fragmented practices.

- In some schools, teachers are asked to play recorded videos of other teachers.

- In others, PDFs or DIKSHA content is copied onto pen drives, with no LMS, no tracking, and no insight into usage or learning.

In several large-scale government projects, there is no defined content, LMS, PAL, or learning framework at all. This is often justified by the assumption that teachers will create material on their own using AI tools, YouTube, or freely available resources.

Is this a sustainable or equitable model for public education?

These distortions persist because, as a system, we still lack even a basic mandate for digital content across K–12, or for LMS, PAL, analytics, and reporting dashboards. There is limited clarity on what type of digital content is effective for different teaching–learning scenarios, or how outcomes should be measured.

Without mandating content & LMS integration, how can consistent learning expectations be ensured? How can accountability, data-driven decisions, and learning outcomes be justified against the scale of public spending on hardware?

The reality is stark: most digital education projects today have little to no visibility into what is actually happening inside classrooms.

In this case, how would you know if your digital education initiative is achieving its intended goals?

Once digital infrastructure is in place, the next and far more important questions are rarely asked:

- How do we know what learning is actually happening inside these classrooms?

- Do we know whether supplementary digital content is being delivered comprehensively across all subjects and grades?

- Is this content on devices accessed through a structured Learning Management System?

- Are there any usage reports and learning analytics being captured at the school, block, district, and state levels?

- How regularly are digital solutions being used in government schools?

- Which subjects are being taught through digital modes, and to what depth?

- Are these interventions limited to occasional video playback, or are they integrated meaningfully into day-to-day teaching?

- Equally important is the nature of content being used. What formats are creating the most value for learners – animated videos, simulations, practice quizzes, games, or guided activities?

- In which subjects are these formats driving better understanding and remediation? Without data, these remain assumptions rather than insights.

- Are students closing their learning gaps from previous years?

- How is each class performing subject-wise and topic-wise?

- What level of remediation is actually taking place through smart classrooms, ICT labs, digital libraries, and Personalized Adaptive Learning platforms?

When these questions go unanswered, government authorities and implementing agencies are left without visibility into the outcomes of their investments. The impact of digital education cannot be measured, refined, or scaled, and its true potential remains largely untapped.

The challenge, however, is neither complex nor insurmountable.

Wherever Government Departments, State Governments, and CSR initiatives are investing in digital learning, the starting point can be simple yet powerful: mandating minimum standards for content, LMS integration, PAL, analytics, and reporting. This way, every rupee spent on hardware is matched with measurable learning outcomes.

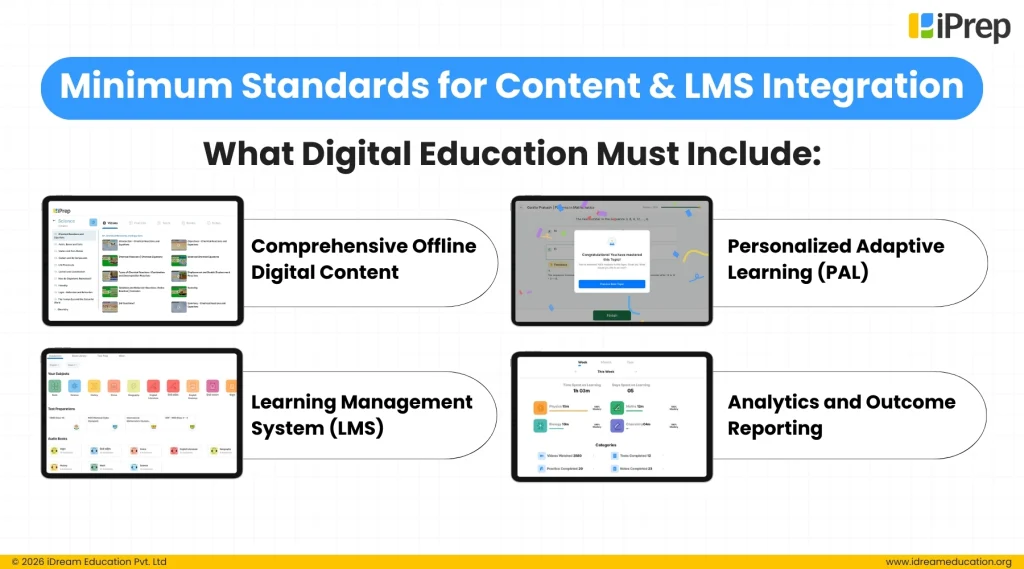

Minimum Standards for Content & LMS Integration: What Must Digital Education Include?

If digital education is expected to deliver measurable learning outcomes, then minimum solution specifications can no longer remain undefined. Hardware alone cannot achieve the goals of digital education unless it is supported by clearly defined, outcome-oriented learning solutions.

At the very least, every digital education initiative, whether led by Government Departments, State Governments, or CSR programmes, must include minimum specifications across the following solution layers:

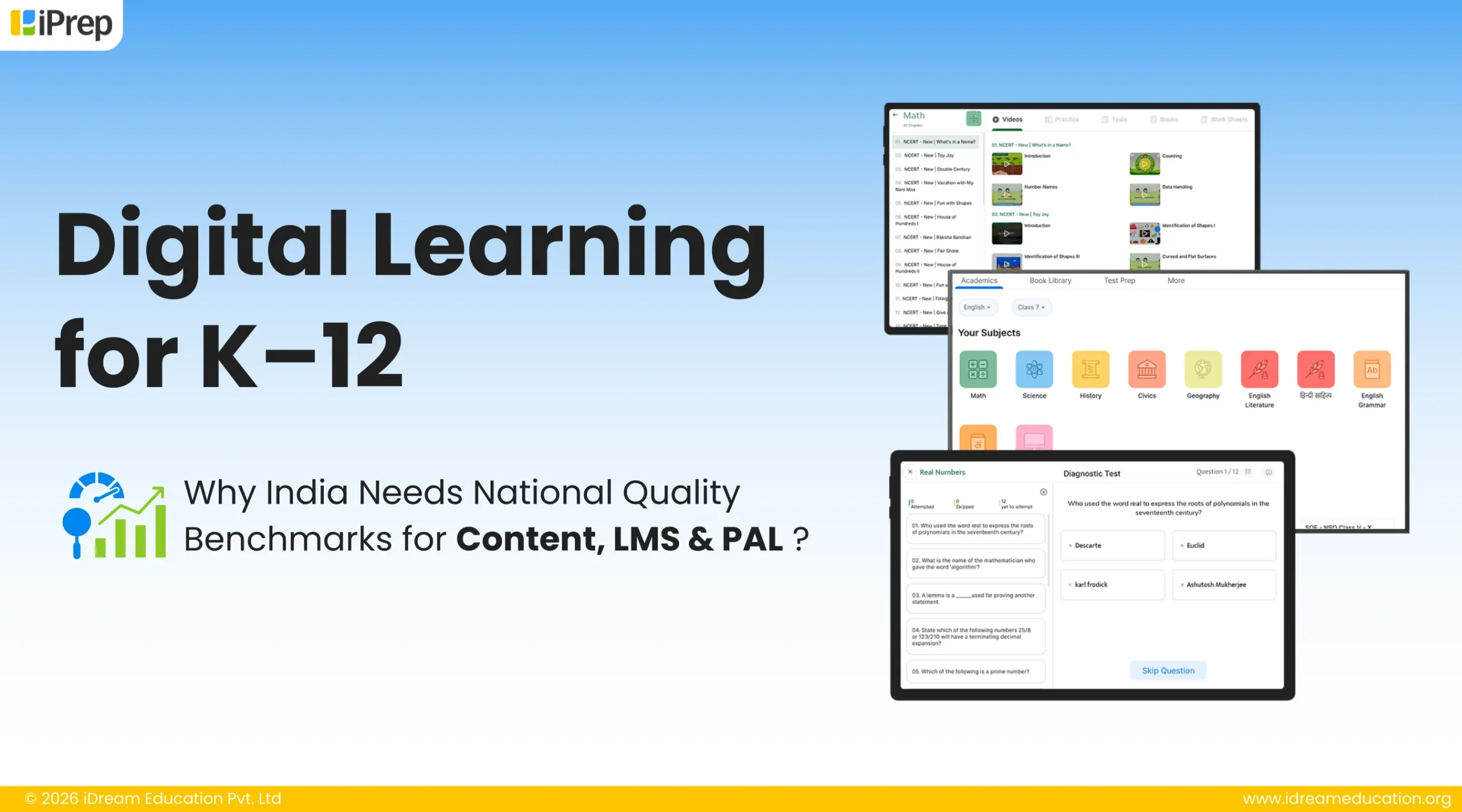

Comprehensive Offline Digital Content

High-quality K–12 digital content in multiple formats – including animated videos, syllabus books, simulations, assessments, activities, and practice exercises – must be available for all subjects and grades, and designed specifically for use in digital classrooms. Content should support classroom teaching, revision, remediation, and self-learning, rather than being limited to passive video consumption.

Learning Management System (LMS)

A robust LMS that functions both online and offline is essential, especially in low-connectivity environments. The LMS must capture usage data across teachers and students, enabling teaching–learning analytics that reflect how often digital tools are used, for which subjects, and with what depth.
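To make the offline requirement concrete, here is a minimal sketch of how an LMS client might queue usage events locally and push them once connectivity returns. The names `UsageEvent`, `OfflineUsageLog`, and the `upload` callback are hypothetical illustrations, not part of any specific LMS product; a real system would also persist the queue to disk.

```python
from dataclasses import dataclass, asdict
from typing import Callable, List


@dataclass(frozen=True)
class UsageEvent:
    """One classroom usage record (all field names are illustrative)."""
    school_id: str
    user_role: str   # "teacher" or "student"
    grade: int
    subject: str
    content_id: str
    minutes: float


class OfflineUsageLog:
    """Keeps events in a local queue until an upload succeeds."""

    def __init__(self) -> None:
        self._pending: List[UsageEvent] = []

    def record(self, event: UsageEvent) -> None:
        # Recording never needs the network, so it works offline.
        self._pending.append(event)

    def pending_count(self) -> int:
        return len(self._pending)

    def sync(self, upload: Callable[[List[dict]], bool]) -> int:
        """Send all pending events; keep them if the upload fails.

        Returns the number of events successfully sent.
        """
        if not self._pending:
            return 0
        batch = [asdict(event) for event in self._pending]
        if upload(batch):
            self._pending.clear()
            return len(batch)
        return 0
```

The design choice worth noting is that a failed sync leaves the queue untouched, so a school with patchy connectivity loses no usage data between attempts.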

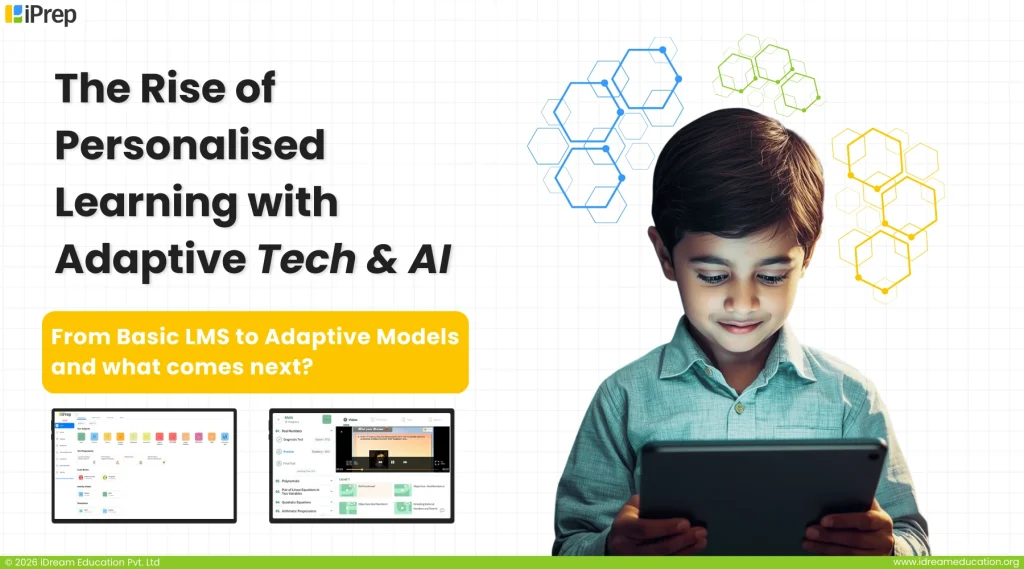

Personalized Adaptive Learning (PAL) LMS

PAL solutions should be embedded to help students identify and bridge their previous year’s learning gaps. Personalized pathways, diagnostics, targeted practice, and remedial learning are critical for ensuring that digital education closes learning gaps and helps students achieve grade-level proficiency, rather than widening existing gaps.
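As a rough illustration of the diagnostic-to-pathway idea, the sketch below turns diagnostic results into an ordered remediation list. The `TopicResult` type, the `remediation_path` function, and the 0.7 mastery threshold are assumptions made for this example; actual PAL platforms use considerably richer models.

```python
from typing import List, NamedTuple


class TopicResult(NamedTuple):
    """Diagnostic outcome for one topic (fields are illustrative)."""
    topic: str
    grade: int     # grade level at which the topic is taught
    score: float   # fraction of diagnostic items answered correctly


def remediation_path(results: List[TopicResult], mastery: float = 0.7) -> List[str]:
    """Return topics below the mastery threshold, earliest grade first.

    Within a grade, the weakest topic comes first. The threshold is an
    assumed value, not a recommended standard.
    """
    gaps = [r for r in results if r.score < mastery]
    gaps.sort(key=lambda r: (r.grade, r.score))
    return [r.topic for r in gaps]


diagnostic = [
    TopicResult("fractions", 4, 0.4),
    TopicResult("decimals", 5, 0.9),
    TopicResult("place value", 3, 0.6),
    TopicResult("ratios", 6, 0.5),
]
path = remediation_path(diagnostic)
```

Ordering by grade first reflects the point made above: gaps from earlier years are addressed before grade-level material.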

Analytics and Outcome Reporting

Clear specifications for analytics and reporting must be defined across all digital learning solutions. Dashboards should enable subject-wise, topic-wise, class-wise, and school-wise visibility into usage, performance, and remediation. This allows decision-makers to track learning outcomes and programme effectiveness, and to decide where to scale or intervene.
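One way to picture such a dashboard is as the same session records rolled up along different dimensions. The sketch below is illustrative only: the record fields (`school`, `class`, `subject`, `topic`, `minutes`, `score`) and the `roll_up` helper are assumed names, and the sample data is invented for the example.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def roll_up(records: Iterable[dict], keys: List[str]) -> Dict[Tuple, dict]:
    """Group session records by the given keys and summarise each group."""
    totals = defaultdict(lambda: {"minutes": 0.0, "score_sum": 0.0, "sessions": 0})
    for record in records:
        group = tuple(record[k] for k in keys)
        totals[group]["minutes"] += record["minutes"]
        totals[group]["score_sum"] += record["score"]
        totals[group]["sessions"] += 1
    return {
        group: {
            "minutes": t["minutes"],
            "sessions": t["sessions"],
            "avg_score": t["score_sum"] / t["sessions"],
        }
        for group, t in totals.items()
    }


# Invented sample sessions, purely for illustration.
sessions = [
    {"school": "S1", "class": "6A", "subject": "Maths", "topic": "Fractions", "minutes": 30.0, "score": 60.0},
    {"school": "S1", "class": "6A", "subject": "Maths", "topic": "Decimals", "minutes": 20.0, "score": 80.0},
    {"school": "S2", "class": "6B", "subject": "Science", "topic": "Light", "minutes": 25.0, "score": 70.0},
]

by_subject = roll_up(sessions, ["school", "subject"])   # school-wise, subject-wise
by_topic = roll_up(sessions, ["subject", "topic"])      # subject-wise, topic-wise
```

Because the grouping keys are a parameter, the same function can serve school, block, district, or state views, provided the underlying records carry those fields.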

Investing in digital infrastructure is necessary, but without these minimum standards for content & LMS integration, we risk building schools that are digital only in name. True digital education begins not with screens and devices, but with systems that make learning visible, measurable, and improvable.

Let us work together to bring the focus back to learning outcomes

It is time to move beyond a hardware-led view of digital education and refocus on what truly matters: learning outcomes. Screens, devices, and infrastructure are enablers, but they are not outcomes in themselves. Outcomes emerge only when technology is paired with well-defined teaching–learning solutions, data, and accountability.

Government bodies, CSR programmes, NGOs, bidders, resellers, hardware players, and the wider EdTech ecosystem are all partners in this journey. Together, there is an opportunity to go deeper into the reasons, challenges, and possibilities that can help shape minimum national standards for teaching–learning solutions in government edtech procurement.

This is not a challenge that any single stakeholder can solve alone. It calls for collective thinking and sustained effort, bringing the focus back to learning, to usage, to data-driven decision-making, and to building the skills that will shape the future careers of students in government schools.